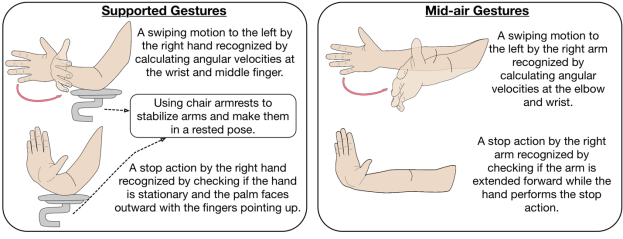

This week, I worked on adding two new gestures to my pipeline: swipe-left and swipe-right.

Initially, I thought that the “swipe-left” and “swipe-right” gestures would consist of “palm” + “inverted_palm”. To achieve that, I had to create 4 new folders:

- palm, rotated 90 degrees clockwise

- palm, rotated 90 degrees counterclockwise

- inverted_palm, rotated 90 degrees clockwise

- inverted_palm, rotated 90 degrees counterclockwise

To produce the rotated palm images, I augmented the initial palm folder:

    # Apply rotations
    swipe_left = cv2.rotate(img_rgb, cv2.ROTATE_90_CLOCKWISE)
    swipe_right = cv2.rotate(img_rgb, cv2.ROTATE_90_COUNTERCLOCKWISE)

After applying the appropriate rotation, these are the final folders:

After that, I retrained my model with the new classes. However, when I tested it with the camera, all of the new classes were misclassified as palm. I then realized why: since I train my model on hand landmarks, the landmarks of a palm look like a palm no matter which way it is facing. I therefore had to choose a different approach to overcome this problem.

I then tried a hybrid model which takes both landmark sequences and grayscale image sequences as input, processes them through two branches (LSTM + CNN), merges them, and outputs 12 gesture classes.

Input shapes:

- Landmark input: (5, 63), i.e. 5 frames of 21 landmarks with 3 coordinates each

- Image input: (5, 128, 128, 1), i.e. 5 grayscale hand crops

The image branch uses TimeDistributed Conv2D, MaxPooling, and Flatten layers, so each frame is transformed independently. The resulting sequences are then passed to an LSTM layer to model temporal dependencies across the 5 frames.
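
A two-branch model of this kind can be sketched in Keras roughly as follows. The input shapes and the 12 output classes come from the description above; the layer sizes (16 filters, 64 LSTM units, pool size 4) and the function name `build_hybrid_model` are placeholder assumptions, not the values I actually used.

```python
from tensorflow import keras
from tensorflow.keras import layers

NUM_CLASSES = 12  # from the post
FRAMES = 5        # from the post


def build_hybrid_model():
    # Landmark branch: an LSTM over 5 frames of 63 landmark coordinates
    lm_in = keras.Input(shape=(FRAMES, 63), name="landmarks")
    lm_feat = layers.LSTM(64)(lm_in)  # 64 units is an assumed size

    # Image branch: the same small CNN is applied to each of the 5 frames
    img_in = keras.Input(shape=(FRAMES, 128, 128, 1), name="images")
    x = layers.TimeDistributed(layers.Conv2D(16, 3, activation="relu"))(img_in)
    x = layers.TimeDistributed(layers.MaxPooling2D(4))(x)
    x = layers.TimeDistributed(layers.Flatten())(x)
    img_feat = layers.LSTM(64)(x)  # models the order of the 5 frames

    # Merge both branches and classify
    merged = layers.concatenate([lm_feat, img_feat])
    out = layers.Dense(NUM_CLASSES, activation="softmax")(merged)
    return keras.Model(inputs=[lm_in, img_in], outputs=out)


model = build_hybrid_model()
print(model.output_shape)
```

Training then takes a pair of arrays per batch, one for the landmark sequences and one for the image sequences, in the same order as the two inputs.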

After training this hybrid model, I visualised a confusion matrix:

As is evident, this approach did not solve the misclassification problem.

After that, I tried to use delta landmarks instead of just landmarks. Delta landmarks represent the frame-to-frame changes in hand keypoint positions, effectively capturing motion vectors rather than static hand poses.
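
As a rough illustration, delta landmarks can be computed with a single NumPy call. This sketch assumes each sequence is stored as a (frames, 63) array, as in the landmark input above; the function name `delta_landmarks` is made up for the example.

```python
import numpy as np


def delta_landmarks(sequence):
    """Return frame-to-frame differences for a (frames, 63) landmark sequence."""
    sequence = np.asarray(sequence, dtype=np.float32)
    # np.diff along the time axis gives x[t+1] - x[t] for every coordinate
    return np.diff(sequence, axis=0)


# Toy sequence: 5 frames in which every coordinate shifts by 0.1 per frame
steps = np.arange(5, dtype=np.float32)[:, None] * 0.1
frames = steps + np.zeros((5, 63), dtype=np.float32)
deltas = delta_landmarks(frames)
print(deltas.shape)  # (4, 63)
```

Note that 5 frames produce only 4 deltas. If the model expects 5 time steps, one option is to prepend a row of zeros, but that is a design choice and not something the approach requires.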

To capture the hand’s orientation, additional features are computed:

- Wrist to Middle Finger Vector: Represents the direction from the wrist to the middle finger, indicating the hand’s pointing direction.

- Index to Pinky Vector: Captures the lateral spread of the hand, useful for distinguishing between gestures like a closed fist and an open palm.

- Palm Normal Vector: Obtained by computing the cross product of vectors from the wrist to the index and pinky fingers, this vector is perpendicular to the palm plane and indicates the palm’s facing direction.
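
The three vectors above could be computed along these lines. The sketch assumes MediaPipe-style indexing of the 21 landmarks (0 = wrist, 5 = index base, 9 = middle base, 17 = pinky base) and uses the base joint of each finger; both are assumptions, as is the function name `orientation_features`.

```python
import numpy as np

# Assumed MediaPipe-style landmark indices
WRIST, INDEX_BASE, MIDDLE_BASE, PINKY_BASE = 0, 5, 9, 17


def _unit(v):
    """Scale a vector to length 1, leaving zero vectors untouched."""
    n = np.linalg.norm(v)
    return v / n if n > 0 else v


def orientation_features(landmarks):
    """Return 9 values: wrist-to-middle, index-to-pinky, and palm normal unit vectors."""
    lm = np.asarray(landmarks, dtype=np.float64).reshape(21, 3)
    wrist_to_middle = _unit(lm[MIDDLE_BASE] - lm[WRIST])
    index_to_pinky = _unit(lm[PINKY_BASE] - lm[INDEX_BASE])
    # The cross product of two in-palm vectors is perpendicular to the palm plane
    palm_normal = _unit(np.cross(lm[INDEX_BASE] - lm[WRIST], lm[PINKY_BASE] - lm[WRIST]))
    return np.concatenate([wrist_to_middle, index_to_pinky, palm_normal])


# Toy hand lying flat in the xy-plane, and the same hand mirrored along x
hand = np.zeros((21, 3))
hand[INDEX_BASE] = [1.0, 1.0, 0.0]
hand[MIDDLE_BASE] = [0.0, 1.0, 0.0]
hand[PINKY_BASE] = [-1.0, 1.0, 0.0]
mirrored = hand * np.array([-1.0, 1.0, 1.0])

normal = orientation_features(hand)[6:]
mirrored_normal = orientation_features(mirrored)[6:]
print(normal, mirrored_normal)  # the two normals point in opposite directions along z
```

In this toy example, mirroring the hand flips the sign of the palm normal, which is the kind of signal that can separate a palm from an inverted palm even when the finger layout looks the same.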

After integrating these metrics into the preprocessing pipeline, I trained an LSTM model and these were the results:

It turns out that extracting more features from each hand helped the model correctly classify the dynamic swipe-left and swipe-right gestures.
