I am in the process of refining my thesis: editing the references, adjusting the word count and filling in missing parts. This week, I have been creating two user personas based on my beneficiaries section, using Figma for the diagrams.

In each persona's bio, I included short fictional scenarios that demonstrate how they would interact with my product and for what purpose.

Besides creating the user personas and writing the “Result evaluation, limitations and future enhancements” section, I have also published the project on GitHub:

https://github.com/alkiki/grad_project

I have written instructions so that users can run the project on their own machines.

These instructions include a .txt file listing all the dependencies that need to be installed, along with their versions.
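Because the project depends on specific package versions, a quick check that a user's environment actually matches the pinned list can save debugging time. The sketch below assumes the dependency file is called `requirements.txt` and uses simple `name==version` pins (both assumptions; adjust to the real file). It only relies on the standard library's `importlib.metadata`.

```python
"""Compare installed package versions against pinned entries in a requirements file.

Assumes `name==version` pins, one per line; the file name is an assumption.
"""
from importlib import metadata


def parse_pins(lines):
    """Return {package_name: pinned_version} for lines of the form name==version."""
    pins = {}
    for raw in lines:
        # Drop comments and environment markers, then surrounding whitespace.
        line = raw.split("#", 1)[0].split(";", 1)[0].strip()
        if not line or "==" not in line:
            continue  # skip blank lines and non-exact specifiers such as >=
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


def check_pins(pins):
    """Return (name, pinned, installed) tuples for every pin that does not match.

    `installed` is None when the package is not installed at all.
    """
    mismatches = []
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != pinned:
            mismatches.append((name, pinned, installed))
    return mismatches


def main(path="requirements.txt"):
    """Print a report for `path`; return 0 if every pin is satisfied, else 1."""
    try:
        with open(path, encoding="utf-8") as fh:
            pins = parse_pins(fh)
    except FileNotFoundError:
        print(f"{path} not found; run this from the project root")
        return 1
    problems = check_pins(pins)
    for name, pinned, installed in problems:
        print(f"{name}: expected {pinned}, found {installed or 'not installed'}")
    print("All pins satisfied" if not problems else f"{len(problems)} mismatch(es)")
    return 0 if not problems else 1


if __name__ == "__main__":
    main()
```

Running it inside each environment after installation would show which packages are missing or at the wrong version before any of the scripts are started.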

Currently, this version of the project only runs on macOS. On Windows, there are several dependency compatibility issues that I have not managed to resolve.

The project currently consists of three scripts that run in two different environments: mediapipe_env for the camera script, and camenv for the WebSocket server and app scripts. The user has to run all three scripts simultaneously, in three split terminals.
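Running three scripts in three terminals could be reduced to a single command with a small launcher. This is a hypothetical sketch, assuming both environments are venv folders in the project root and using placeholder script names (`camera.py`, `server.py`, `app.py`) rather than the project's real file names. Each script is started with its own environment's interpreter, so the two environments stay separate.

```python
"""Launch the three project scripts from a single terminal.

Sketch under assumptions: both environments are venvs in the project root,
and the script names below are hypothetical placeholders.
"""
import subprocess
import sys
from pathlib import Path

# (environment, script) pairs; replace the placeholder script names.
PROCESSES = [
    ("mediapipe_env", "camera.py"),
    ("camenv", "server.py"),
    ("camenv", "app.py"),
]


def build_command(env, script, root=Path(".")):
    """Return the argv that runs `script` with the interpreter inside `env`."""
    # On macOS a venv keeps its interpreter at <env>/bin/python.
    return [str(root / env / "bin" / "python"), script]


def launch_all(processes=PROCESSES):
    """Start every script as a child process and wait for all of them."""
    children = [subprocess.Popen(build_command(env, s)) for env, s in processes]
    try:
        for child in children:
            child.wait()
    except KeyboardInterrupt:
        # Ctrl+C stops all scripts together instead of leaving some running.
        for child in children:
            child.terminate()
        for child in children:
            child.wait()


if __name__ == "__main__":
    if "--run" in sys.argv:
        launch_all()
    else:
        # Default: only print the commands, so the setup can be checked first.
        for env, script in PROCESSES:
            print(" ".join(build_command(env, script)))
```

If the environments are conda environments instead, `build_command` would need to point at each environment's own interpreter (typically under the conda installation's `envs/<name>/bin/python`).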

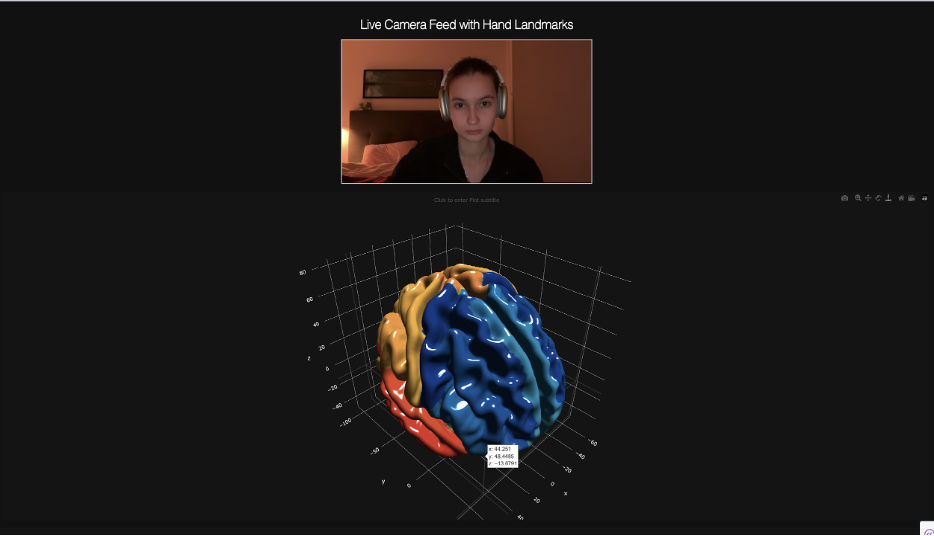

Later that week, I managed to get the camera feed running in real time inside the website, which makes the user interface less cluttered.

Besides changing the UI, I also carried out a heuristic evaluation of my product to identify which enhancements are needed in future iterations: https://www.figma.com/design/8WRuNMaj1xEn1GTje3Swln/Heuristic-Evaluation-Template–Community-?node-id=0-1&m=dev&t=Ah00mFRDTEG6vGzz-1. One of the main concerns is the absence of upfront instructions: there are no clear examples of the set of gestures that can be used.

Regarding more general improvements, I would like to experiment with the data visualisation itself and build it myself from a dataset I find interesting. Exploring alternative training methods could further enhance performance: semi-supervised learning would leverage unlabelled data to reduce annotation effort, reinforcement learning could adapt the model through trial-and-error feedback, and a personalisation phase, where the system fine-tunes to each user’s hand shape and motion patterns, would improve individual accuracy.
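To make the semi-supervised idea more concrete, one common form is self-training (pseudo-labelling): train on the labelled gestures, label the unlabelled ones the model is confident about, add them to the training set and repeat. The toy sketch below is illustrative only; the 2-D points stand in for gesture feature vectors, and the nearest-centroid classifier and margin-based confidence rule are my own simplifying choices, not the project's model.

```python
"""Self-training (pseudo-labelling) with a nearest-centroid classifier.

Toy sketch: 2-D points stand in for gesture features; the classifier and
the confidence rule are illustrative choices, not the project's model.
"""
import math


def centroids(samples):
    """Mean feature vector per label from (features, label) pairs."""
    sums, counts = {}, {}
    for x, y in samples:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(cents, x):
    """Return (label, margin): nearest centroid and its gap to the runner-up."""
    dists = sorted((math.dist(x, c), y) for y, c in cents.items())
    margin = dists[1][0] - dists[0][0] if len(dists) > 1 else math.inf
    return dists[0][1], margin


def self_train(labelled, unlabelled, min_margin=1.0, rounds=5):
    """Repeatedly add confidently predicted unlabelled points as pseudo-labels.

    Returns the final centroids and the points that never became confident.
    """
    train, pool = list(labelled), list(unlabelled)
    for _ in range(rounds):
        cents = centroids(train)
        confident, rest = [], []
        for x in pool:
            label, margin = predict(cents, x)
            (confident if margin >= min_margin else rest).append((x, label))
        if not confident:
            break  # nothing new is confident enough; stop early
        train.extend(confident)
        pool = [x for x, _ in rest]
    return centroids(train), pool
```

Points that sit between two gestures (small margin) are never pseudo-labelled, which is what keeps self-training from reinforcing its own mistakes; in practice the threshold would need tuning on real data.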
